NY's Digital Bills Could Make Things Worse For Teens

Nobody wants their children to see hate speech and violence online. But by prohibiting the use of algorithms to sort content on online platforms like YouTube, Instagram, and TikTok, New York’s S7694 and A8148 could make feeds worse.

Instead of giving teenagers a healthier online experience, New York’s bills could prevent platforms from ensuring age-appropriate feeds for teens. That could mean that whoever posts most recently goes to the top of a teen’s feed – even if that post is spam, hate speech, or other harmful content.

In seeking to help teenagers, the bills’ requirements could end up harming at-risk teens or invading their privacy. And the bills as written are likely to face the same legal challenges that have halted similar state bills.

Mandates Chronological Feeds, Which Are Not the Answer

The bills require platforms to offer purely chronological feeds by default, but numerous studies and commentators have found that chronological feeds are worse for users.

Whistleblower Frances Haugen revealed an internal Facebook test of chronological-only feeds, finding that:

“Meaningful Social Interactions — the back and forth comments between friends […] — dropped by 20%.”

Users “hid 50% more posts, indicating they weren’t thrilled with what they were seeing.”

“People spent more time scrolling through the News Feed searching for interesting stuff, and saw more advertisements as they went” – increasing Facebook’s ad revenue.

Users “saw double the amount of posts from public pages they don’t follow, often because friends commented on those pages.”

In a separate study of Facebook, “users served the reverse chronological feed…encountered more political and untrustworthy content on Facebook and Instagram than users with the standard feed” – specifically a 68% increase in ‘untrustworthy’ content.

TechDirt commented that “this leaked research pokes a pretty big hole in the idea that getting rid of algorithmic recommendations does anything particularly useful.”

Platformer’s Casey Newton concluded, “I’ve seen no evidence that forcing a return to chronological feeds would address any policy aim in particular. In short, it feels like vibes-based regulation.”

And academic researchers have found that algorithmically-curated feeds “help consumers experience better content.”

Could Promote Toxic Posts Over Healthy Content

If a teen shows interest in healthy content – like journalism, sports figures, or book trends – online platforms can be a place to nurture that spark and build community with peers who share the same interest. But New York’s digital media bills prohibit online platforms from showing teens a feed with relevant content by default.

These bills could punish young people searching for better online experiences. For example, teenagers interested in applying to college might visit university pages or an SAT prep group. Not only could the bills’ ban on algorithms prevent them from seeing tailored content based on their interactions, but it could also make it harder for platforms to keep toxic content like self-harm and eating disorder content out of their feeds.

Algorithmically curated feeds can protect users from harassment and cyberbullying. Unfortunately, New York’s digital media bills could require platforms to display cyberbullying from classmates in a reverse chronological feed (§1500(1)(e)).

Content curation allows platforms to downrank and sometimes remove unwanted interactions like coordinated racial or gender-based harassment. Instead of ensuring the internet is a positive place where young people can thrive, the bills could strip platforms of their ability to protect users altogether.

Could Prevent Age-Appropriate Design of Online Services

Online services are working hard to design age-appropriate services for teenagers, particularly younger teens. Online platforms use algorithms to provide a different experience for a thirteen-year-old than the experience they provide for a seventeen-year-old. Just as movie ratings restrict access to films depending on the age of a minor, algorithms tailor content by age. New York’s digital media bills would bar technology platforms from curating social media feeds by default, forbidding services from tailoring content to younger teens based on age inference. For example:

Instagram announced that it would be stricter about what types of content it recommends to 13- to 18-year-olds. In 2021, Instagram started steering teens who are searching for disordered eating topics toward helpful support resources. Snapchat algorithmically highlights resources, including hotlines for help, if teenagers encounter sexual risks, like catfishing or financial extortion.

The bills could break these tools, which rely on algorithms to sift through posts and downrank harmful content.

Could Threaten a Refuge For At-Risk Teens

S7694 and A8148 include provisions that make teen access to online resources contingent on parental consent. For many teens – including those from abusive families and LGBTQ+ teens with unsupportive parents – online communities are a refuge. Under the bills, online services couldn’t help a teenager interested in coming-out guides, bullying prevention, or dealing with family abuse – unless their parents okay it. And even in the most supportive households, the requirement for verifiable consent further escalates privacy risks, as it necessitates processing the personal information of both the parent and the teen.

Writing in the New York Daily News, representatives from the Brooklyn Community Pride Center and New Immigrant Community Empowerment (NICE) warned of this risk and the dangers that come with creating barriers to accessing critical — and sometimes life-saving — information.

“The wrong kind of legislation would not only stop these kids from exploring resources anonymously, but in some cases require their parents’ permission to visit supportive websites or find community online — forcing kids to come out to their parents, who might disapprove or bar access entirely.”

—Hildalyn Colon Hernandez and Omari Scott, New York Daily News, 12/03/23

Could Endanger Privacy by Putting Personal Data at Risk

New York’s digital media legislation could force online platforms to identify the age of their users, which would require users to turn over personally identifiable information. To detect any users who are minors, platforms would need to identify the age of ALL users – a massive encroachment on individual privacy. In fact, estimating a user’s age requires collecting more data, which runs contrary to data minimization efforts. For security reasons, many adults reasonably prefer not to share identifying information with online services – creating a dilemma for anyone online: turn over sensitive personal data or leave the online platforms where they connect with friends and family.

Could Unconstitutionally Restrict Access to Content

New York’s digital media legislation infringes the First Amendment by targeting minors’ access to protected speech and encroaching upon the editorial freedoms of online platforms.

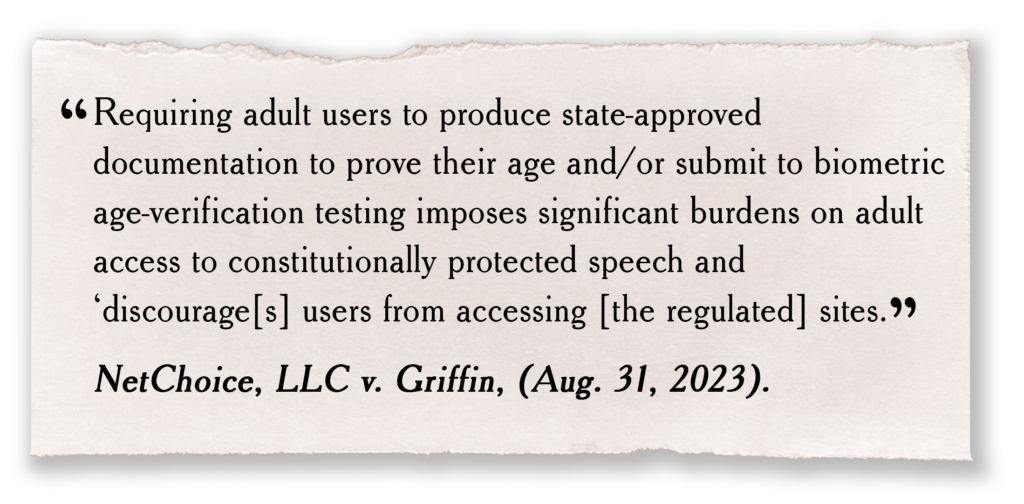

Age verification mandates compromise privacy and violate the First Amendment. Echoing the Supreme Court’s decision in Reno v. ACLU, courts have consistently ruled these mandates unconstitutional, as they indiscriminately limit access to protected speech for both adults and minors.

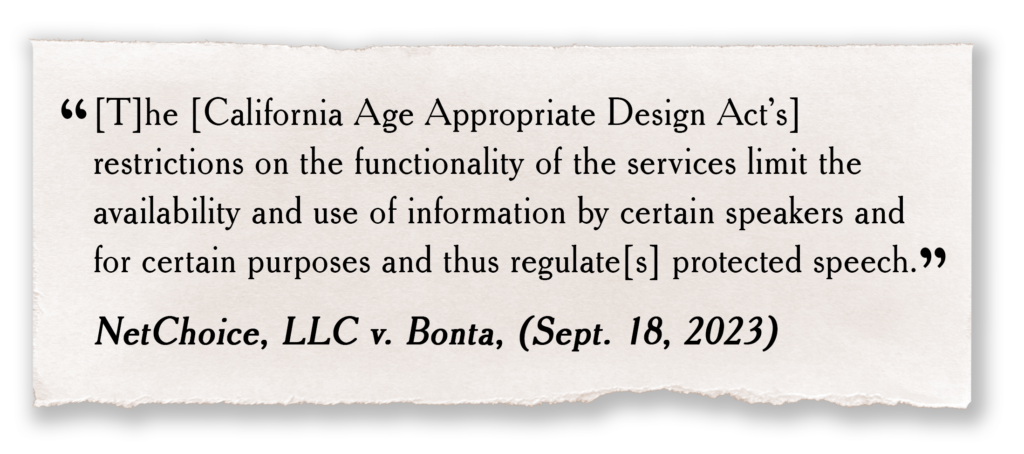

Moreover, the courts have consistently rejected the notion that laws like New York’s don’t affect speech. Recent cases in Arkansas, California, and Ohio demonstrate that laws targeting “social media design” inherently regulate content, showing how attempts to control the mechanics of speech indirectly limit the speech itself.

In her analysis of the legislation, Chamber of Progress Senior Counsel Jess Miers unpacks these legal issues and concludes that “the bill’s provisions not only challenge the essence of innovation but also raise significant constitutional questions, setting the stage for yet another legal battle that could render it obsolete.”